SMS Blog

Leveraging EKS Pod Identity on AWS EKS (Part 1 of 3): Securing Application Access

Welcome to the first post in our three-part series on mastering AWS access management in EKS. When you deploy applications on Amazon EKS, a critical challenge is granting your workloads the right level of access to other AWS services like S3, DynamoDB, or SQS. How do you provide these permissions securely, without exposing credentials or granting overly broad access?

This series will guide you through the modern, secure standard for this process: EKS Pod Identity. In this first part, we will explore the evolution of AWS access for pods, demonstrate why EKS Pod Identity is the superior choice from a security perspective, and provide a complete guide on how to implement it for your applications using Terraform. In Part 2, we will extend this powerful pattern to manage the operational add-ons that run your cluster, and in Part 3, we’ll tackle the complex challenge of cross-account access.

The Old Ways: Less-Than-Ideal Methods

Before we jump into the recommended approach, let’s look at two common but flawed methods for granting AWS access to EKS workloads.

Option 1: IAM User Keys

The most straightforward, yet least secure, method is to create an IAM User, generate an access key and secret key, and store them in a Kubernetes Secret. Your application pods then consume that Secret, typically as environment variables.

Why is this a bad idea?

- Static Credentials: These keys are long-lived and static. If they are compromised, an attacker has persistent access to your AWS resources until you manually rotate them.

- High Risk of Exposure: Storing credentials directly in your cluster, even as secrets, increases the attack surface. Anyone with access to the cluster with sufficient Kubernetes permissions could potentially retrieve these keys.

- Auditing and Management Hell: Managing the rotation and lifecycle of these static keys across multiple applications and environments is a significant operational burden.
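For reference, the anti-pattern described above might look roughly like this in Terraform. This is a sketch of what to avoid, not a recommendation. The user name, secret name, and namespace are hypothetical, and a configured Kubernetes provider is assumed.

```hcl
# ANTI-PATTERN: shown only to illustrate the risks listed above.
# All resource and object names here are hypothetical.
resource "aws_iam_user" "legacy_app" {
  name = "legacy-app-user"
}

# Long-lived access key: it stays valid until someone rotates or deletes it.
resource "aws_iam_access_key" "legacy_app" {
  user = aws_iam_user.legacy_app.name
}

# The key pair is copied into the cluster, where anyone allowed to read
# Secrets in this namespace can retrieve it.
resource "kubernetes_secret" "legacy_app_aws" {
  metadata {
    name      = "legacy-app-aws-credentials"
    namespace = "default"
  }

  data = {
    AWS_ACCESS_KEY_ID     = aws_iam_access_key.legacy_app.id
    AWS_SECRET_ACCESS_KEY = aws_iam_access_key.legacy_app.secret
  }
}
```

Note that with this approach the secret key also ends up in the Terraform state, which adds one more place where the credential has to be protected.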

Option 2: EC2 Instance Roles

A significant improvement is to leverage the IAM role attached to the EKS worker nodes (the EC2 instances). By adding permissions to the instance role, any pod running on that node can inherit those permissions and use the AWS SDK to make API calls without needing static credentials.

Why is this better, but still not great?

This approach eliminates static credentials, which is a major win. However, it violates the principle of least privilege. Every single pod running on a given node gets the exact same set of permissions. If a node hosts a dozen different microservices, they all share the same role. A vulnerability in one application could be exploited to abuse the permissions granted to all other applications on that node. You have no granular, pod-level control.
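As a rough illustration of the problem, consider what granting S3 read access through the node role might look like. The sketch assumes a node role named aws_iam_role.node (the same name used in the Terraform later in this post); the attachment name is hypothetical.

```hcl
# Assumption: aws_iam_role.node is the IAM role attached to the worker nodes.
# Attaching an application permission here grants it to EVERY pod
# scheduled onto those nodes, not just the one service that needs it.
resource "aws_iam_role_policy_attachment" "node_s3_read" {
  role       = aws_iam_role.node.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
}
```

Nothing in this configuration mentions a specific application, which is exactly the issue: the permission is scoped to the node, not to the workload.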

The Modern Solution: EKS Pod Identity

EKS Pod Identity is AWS’s native solution to this problem, providing the ability to associate IAM roles with individual Kubernetes service accounts. This allows you to scope permissions directly to the pods that need them, achieving true least-privilege access.

Why is EKS Pod Identity the best option?

- Security First: It eliminates the need for static credentials and overly permissive instance roles. Each pod gets precisely the permissions it requires and nothing more.

- Fine-Grained Control: Permissions are tied to a Kubernetes Service Account, not the underlying node. This means you can have multiple pods on the same node, each with its own distinct IAM role and set of permissions.

- Auditing and Compliance: Access is managed through IAM roles, which are fully auditable in AWS CloudTrail. You can easily see which pod (via its service account) accessed which AWS resource and when.

How EKS Pod Identity Works

So, how does this all work under the hood? The process is an elegant and secure handshake between Kubernetes and AWS IAM, happening seamlessly in the background every time your pod needs to access an AWS service.

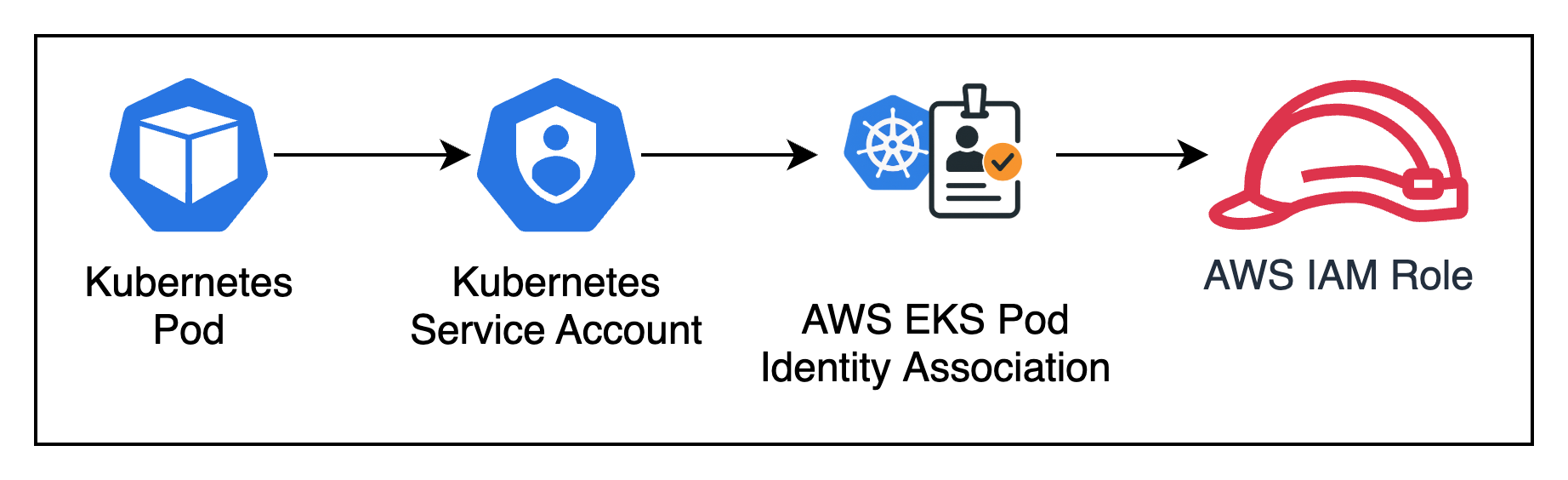

Before we walk through how Pod Identity works, it is important to understand how the resources running in Kubernetes are connected to AWS IAM Roles. Put simply, each Kubernetes Pod runs under a Kubernetes Service Account, and an AWS resource called an EKS Pod Identity Association connects that Service Account to an AWS IAM Role.

Figure 1: Connecting Kubernetes resources to AWS resources

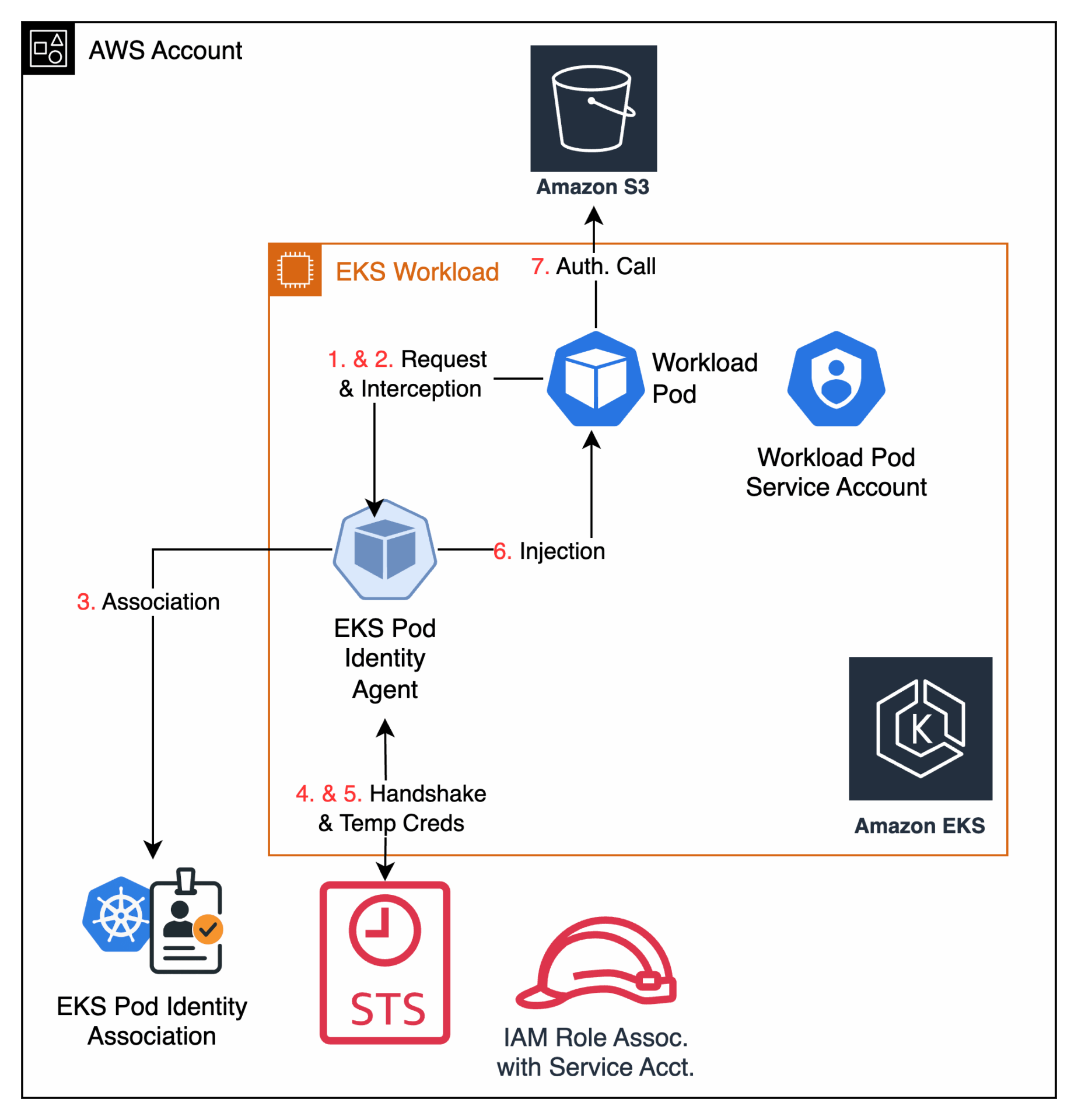

Now let’s walk through how EKS Pod Identity works step by step.

- The Request: It all begins inside your cluster. Your application, running in a Pod, needs to read an object from an S3 bucket. It uses the standard AWS SDK to make the call, completely unaware of the credential magic that’s about to happen. The Pod is configured to use a specific Kubernetes Service Account (e.g., my-app-sa), which acts as its identity.

- The Interception: Before that request can leave the worker node, it’s intercepted by the EKS Pod Identity Agent. This agent is a lightweight component that runs on every node in your cluster. It inspects the request and sees that it came from a pod using the my-app-sa Service Account.

- The Association: The agent now consults the EKS Pod Identity Association that you created in AWS. This association is the critical bridge, acting as a map that explicitly links the Kubernetes Service Account (my-app-sa in the my-app-namespace) to a specific IAM Role.

- The “Assume Role” Handshake: Acting as a secure proxy, the agent makes a call to the AWS Security Token Service (STS) on the pod’s behalf. It essentially says, “I have a request from a pod whose identity is authorized to assume this specific IAM Role. Please grant me credentials.”

- The Temporary Credentials: STS validates the request. It checks the IAM Role’s trust policy, sees that it trusts the EKS Pod Identity service (pods.eks.amazonaws.com), and confirms the association is valid. Satisfied, STS generates a set of temporary, short-lived security credentials (an access key, a secret key, and a session token) and sends them back to the agent.

- The Secure Injection: The agent securely receives these temporary credentials and injects them into the application pod’s environment.

- The Authenticated Call: The AWS SDK in your pod, which was patiently waiting for credentials, automatically discovers and uses them to complete its original, now-authenticated request to Amazon S3. The application gets its data, and not a single static access key was ever stored in your cluster.

Figure 2: How EKS Pod Identity Works

This entire process happens in milliseconds and is completely transparent to your application. The result is a seamless, highly secure authentication flow that enforces the principle of least privilege right down to the individual pod.

Implementing EKS Pod Identity with Terraform

Now for the practical part. Let’s configure EKS Pod Identity from the ground up using Terraform. The process involves three main steps:

- Configuring the EKS Node Group for EC2 Instance Metadata Service v2 (IMDSv2).

- Deploying the EKS Pod Identity Add-On.

- Creating the Pod Identity Association and Kubernetes resources.

Step 1: Configure the EKS Node Group for IMDSv2

EKS Pod Identity relies on the EC2 Instance Metadata Service (IMDS). To ensure the secure proxying of credentials, the worker nodes must be configured to support IMDSv2 and have their hop limit increased. The Pod Identity agent running on the node acts as a proxy, which adds a hop to the metadata request. Therefore, the put-response-hop-limit for the instance metadata endpoint must be set to at least two.

This is done by creating an IAM Role for the nodes, attaching the required baseline permissions, creating an aws_launch_template, and referencing both in your aws_eks_node_group. The following Terraform code snippet demonstrates how to configure this:

    # Data source to get the latest EKS-optimized AMI for a specific Kubernetes version
    data "aws_ami" "eks_optimized_al2" {
      filter {
        name   = "name"
        values = ["amazon-eks-node-${var.kubernetes_version}-v*"]
      }

      most_recent = true
      owners      = ["amazon"]
    }

    # IAM Role for the EKS Worker Nodes
    resource "aws_iam_role" "node" {
      name = "eks-node-group-role"

      assume_role_policy = jsonencode({
        Statement = [{
          Action = "sts:AssumeRole"
          Effect = "Allow"
          Principal = {
            Service = "ec2.amazonaws.com"
          }
        }]
        Version = "2012-10-17"
      })
    }

    # Attach necessary policies to the Node Group IAM Role
    resource "aws_iam_role_policy_attachment" "amazon_eks_worker_node_policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
      role       = aws_iam_role.node.name
    }

    resource "aws_iam_role_policy_attachment" "amazon_eks_cni_policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
      role       = aws_iam_role.node.name
    }

    resource "aws_iam_role_policy_attachment" "amazon_ec2_container_registry_read_only" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
      role       = aws_iam_role.node.name
    }

    # Create a Launch Template with the correct metadata options
    resource "aws_launch_template" "eks_nodes" {
      name_prefix   = "main-nodes-lt-"
      description   = "Launch template for EKS worker nodes with Pod Identity"
      image_id      = data.aws_ami.eks_optimized_al2.id
      instance_type = "t3.medium"

      # Because image_id is set, EKS treats this as a custom AMI and does not
      # add its own bootstrap user data, so the node must run the Amazon Linux 2
      # bootstrap script itself in order to join the cluster.
      user_data = base64encode(<<-EOT
        #!/bin/bash
        /etc/eks/bootstrap.sh ${aws_eks_cluster.main.name}
      EOT
      )

      # EKS Pod Identity Requirement:
      # The hop limit must be increased to at least 2
      # to allow the pod identity agent to proxy credentials.
      metadata_options {
        http_tokens                 = "required" # Enforces IMDSv2
        http_put_response_hop_limit = 2          # Required for Pod Identity
      }

      tag_specifications {
        resource_type = "instance"

        tags = {
          Name = "eks-worker-node"
        }
      }
    }

    # Reference the Launch Template from the EKS Node Group
    resource "aws_eks_node_group" "main" {
      cluster_name    = aws_eks_cluster.main.name
      node_group_name = "main-nodes"
      node_role_arn   = aws_iam_role.node.arn
      subnet_ids      = var.private_subnets

      scaling_config {
        desired_size = 2
        min_size     = 1
        max_size     = 3
      }

      # Reference the launch template created above
      launch_template {
        id      = aws_launch_template.eks_nodes.id
        version = aws_launch_template.eks_nodes.latest_version
      }

      # Ensure the IAM role and policies are created before the node group
      depends_on = [
        aws_iam_role_policy_attachment.amazon_eks_worker_node_policy,
        aws_iam_role_policy_attachment.amazon_eks_cni_policy,
        aws_iam_role_policy_attachment.amazon_ec2_container_registry_read_only,
      ]
    }

A Note on EKS Upgrades

A key consideration when using a custom aws_launch_template is how it impacts the EKS node group upgrade process. By specifying an image_id directly in the launch template, you are pinning the node group to a specific Amazon Machine Image (AMI). When it’s time to upgrade your Kubernetes version, you will need to update this image_id yourself (for example, by changing the kubernetes_version variable used by the aws_ami data source) and create a new version of the launch template. Keep in mind that the data source uses most_recent = true, so the resolved AMI can change between runs. If you manage multiple EKS clusters and want them all on the same image, consider another approach, such as specifying the AMI ID explicitly.

This contrasts with managing the AMI directly through the release_version attribute on the aws_eks_node_group resource itself (when not using a custom launch template). The release_version method simplifies upgrades, as EKS handles selecting the correct AMI for you. However, since EKS Pod Identity requires modifying the launch template’s metadata options (http_put_response_hop_limit), using a custom launch template is a necessary trade-off for the enhanced security it provides.
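One possible middle ground, sketched below, is a launch template that sets only the metadata options and omits image_id, so that EKS continues to select the AMI for the managed node group. This is an assumption-laden sketch rather than a tested configuration: the resource names eks_nodes_managed_ami and managed_ami are hypothetical, the cluster, node role, and subnet variable are the ones used elsewhere in this post, and you should confirm the behaviour against the current EKS launch template documentation before relying on it.

```hcl
# Sketch: same metadata options as above, but no image_id,
# so the managed node group keeps using the EKS-selected AMI.
resource "aws_launch_template" "eks_nodes_managed_ami" {
  name_prefix = "main-nodes-managed-ami-lt-"

  metadata_options {
    http_tokens                 = "required"
    http_put_response_hop_limit = 2
  }
}

resource "aws_eks_node_group" "managed_ami" {
  cluster_name    = aws_eks_cluster.main.name
  node_group_name = "main-nodes-managed-ami"
  node_role_arn   = aws_iam_role.node.arn
  subnet_ids      = var.private_subnets

  # The instance type moves here because the launch template no longer sets it.
  instance_types = ["t3.medium"]

  scaling_config {
    desired_size = 2
    min_size     = 1
    max_size     = 3
  }

  launch_template {
    id      = aws_launch_template.eks_nodes_managed_ami.id
    version = aws_launch_template.eks_nodes_managed_ami.latest_version
  }
}
```

The trade-off is less control over the exact image in exchange for simpler upgrades, so which variant fits depends on how strictly you need to pin AMIs.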

Step 2: Deploy the EKS Pod Identity Add-On

Next, you need to enable the eks-pod-identity-agent add-on on your cluster. The add-on runs as a DaemonSet, so an agent pod is placed on every node. That agent intercepts credential requests from your pods and exchanges them for temporary credentials from the associated IAM role.

You can manage this easily with the aws_eks_addon resource:

    resource "aws_eks_addon" "pod_identity" {
      cluster_name = aws_eks_cluster.main.name
      addon_name   = "eks-pod-identity-agent"
    }
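If you would rather pin the add-on version than accept the default, one possible variant of the resource above is sketched here. It assumes the same aws_eks_cluster.main resource and uses the aws_eks_addon_version data source to look up the most recent agent version compatible with the cluster's Kubernetes version. It is meant to replace the block above, not to sit alongside it.

```hcl
# Look up the newest agent version compatible with the cluster's Kubernetes version
data "aws_eks_addon_version" "pod_identity" {
  addon_name         = "eks-pod-identity-agent"
  kubernetes_version = aws_eks_cluster.main.version
  most_recent        = true
}

# Variant of the add-on resource with an explicit version
resource "aws_eks_addon" "pod_identity" {
  cluster_name  = aws_eks_cluster.main.name
  addon_name    = "eks-pod-identity-agent"
  addon_version = data.aws_eks_addon_version.pod_identity.version
}
```

Making the version explicit means add-on upgrades show up in the Terraform plan, which can make them easier to review.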

Step 3: Create the Pod Identity Association

This is where the magic happens. You create an aws_eks_pod_identity_association to link an IAM role to a Kubernetes service account.

First, define the IAM Role and Policy that your pod will assume. This policy should grant only the permissions the application needs. For this example, we’ll grant read-only access to a specific S3 bucket.

    # IAM Policy for the Pod
    resource "aws_iam_policy" "s3_reader" {
      name        = "my-app-s3-reader-policy"
      description = "Allows read-only access to a specific S3 bucket"

      policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Action = [
              "s3:GetObject",
              "s3:ListBucket"
            ]
            Effect = "Allow"
            Resource = [
              "arn:aws:s3:::my-app-bucket",
              "arn:aws:s3:::my-app-bucket/*"
            ]
          },
        ]
      })
    }

    # IAM Role the Pod will assume
    resource "aws_iam_role" "pod_identity" {
      name = "my-app-pod-identity-role"

      # Trust policy that allows the EKS Pod Identity service to assume this role.
      # EKS Pod Identity needs sts:TagSession in addition to sts:AssumeRole,
      # because it attaches session tags when it assumes the role.
      assume_role_policy = jsonencode({
        Version = "2012-10-17"
        Statement = [
          {
            Effect = "Allow"
            Principal = {
              Service = "pods.eks.amazonaws.com"
            }
            Action = [
              "sts:AssumeRole",
              "sts:TagSession"
            ]
          },
        ]
      })
    }

    # Attach the policy to the role
    resource "aws_iam_role_policy_attachment" "pod_identity" {
      role       = aws_iam_role.pod_identity.name
      policy_arn = aws_iam_policy.s3_reader.arn
    }

Now, create the association that ties this IAM role to the specific Kubernetes namespace and service account name you intend to use.

    resource "aws_eks_pod_identity_association" "main" {
      cluster_name    = aws_eks_cluster.main.name
      namespace       = "my-app-namespace"
      service_account = "my-app-sa"
      role_arn        = aws_iam_role.pod_identity.arn

      # Ensure the addon is deployed before creating the association
      depends_on = [aws_eks_addon.pod_identity]
    }
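To make the association easier to inspect after an apply, you might also expose a couple of outputs. This is an optional sketch: the output names are hypothetical, and it assumes the aws_eks_pod_identity_association.main and aws_iam_role.pod_identity resources defined above.

```hcl
# Hypothetical output names; adjust to your own conventions.
output "pod_identity_association_id" {
  description = "ID of the EKS Pod Identity association for my-app-sa"
  value       = aws_eks_pod_identity_association.main.association_id
}

output "pod_identity_role_arn" {
  description = "ARN of the IAM role that my-app-sa pods receive credentials for"
  value       = aws_iam_role.pod_identity.arn
}
```

After applying, you can compare these values with what aws eks list-pod-identity-associations reports for the cluster.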

Finally, you need to create the Kubernetes resources themselves: the namespace, the service account, and the pod. You can manage these directly with the Terraform Kubernetes provider. The service account doesn’t need any special annotations; the association is handled entirely on the AWS side.

    # Assumes you have the Kubernetes provider configured
    # provider "kubernetes" { ... }

    resource "kubernetes_namespace" "app" {
      metadata {
        name = "my-app-namespace"
      }
    }

    resource "kubernetes_service_account" "app" {
      metadata {
        name      = "my-app-sa"
        namespace = kubernetes_namespace.app.metadata[0].name
      }

      # No special annotations are needed on the service account itself.
    }

    # Example Pod that uses the Service Account
    resource "kubernetes_pod" "app_pod" {
      metadata {
        name      = "my-app-pod"
        namespace = kubernetes_namespace.app.metadata[0].name
      }

      spec {
        service_account_name = kubernetes_service_account.app.metadata[0].name

        container {
          image = "public.ecr.aws/aws-cli/aws-cli:latest"
          name  = "my-app-container"

          # A simple command to test S3 access.
          # Replace 'my-app-bucket' with your actual bucket name.
          command = ["/bin/sh", "-c", "aws s3 ls s3://my-app-bucket && sleep 3600"]
        }
      }

      # Credentials are wired up when the pod is created, so the association
      # should exist before the pod starts.
      depends_on = [
        kubernetes_service_account.app,
        aws_eks_pod_identity_association.main,
      ]
    }

With this configuration, the my-app-pod will be deployed into the my-app-namespace and will use the my-app-sa service account. Because of the aws_eks_pod_identity_association you created earlier, this pod will automatically receive temporary credentials with the permissions defined in the my-app-pod-identity-role. Your application’s AWS SDK (or in this case, the AWS CLI) will handle the rest seamlessly.
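In practice you will usually run workloads through a Deployment rather than a bare Pod. A minimal sketch of the same test container as a Deployment follows. The resource name app and the app = "my-app" label are hypothetical, and it assumes the namespace, service account, and association shown above already exist.

```hcl
# Sketch: the same test container, managed by a Deployment.
resource "kubernetes_deployment" "app" {
  metadata {
    name      = "my-app"
    namespace = "my-app-namespace"
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "my-app"
      }
    }

    template {
      metadata {
        labels = {
          app = "my-app"
        }
      }

      spec {
        # Every replica runs under the service account named in the association
        service_account_name = "my-app-sa"

        container {
          name    = "my-app-container"
          image   = "public.ecr.aws/aws-cli/aws-cli:latest"
          command = ["/bin/sh", "-c", "aws s3 ls s3://my-app-bucket && sleep 3600"]
        }
      }
    }
  }
}
```

Because the association is keyed on namespace and service account name, every replica should receive credentials for the same IAM role without any per-pod configuration.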

Conclusion and Next Steps

Granting AWS permissions to EKS workloads doesn’t have to be a security risk. By moving away from static credentials and overly permissive instance roles, you can significantly harden your security posture. EKS Pod Identity provides a robust, scalable, and secure framework for managing AWS access at a granular, application-specific level.

Now that we have established a secure foundation for our applications, how do we handle the operational software that powers our cluster? In Part 2 of this series, “Cluster Management”, we will show you how to apply this same EKS Pod Identity pattern to EKS Add-Ons, creating a unified and simple permissions model for your entire cluster.