SMS Blog

Illumio PCE Automation

Problem

There is a need to build, test, and dismantle a 4-node Illumio Core implementation that complies with the Security Technical Implementation Guide (STIG).

Solution

Utilize a combination of Packer, Terragrunt, and Ansible to automate the image build process and the installation of Illumio Core. This will be executed within a VMware vSphere environment.

Why?

To test the product thoroughly, you need to “break it,” for lack of a better term. A ClickOps install takes 2 to 3 hours from start to finish, and repeating that every time a rebuild is needed wastes a significant amount of time.

“ClickOps” is a term used to describe a method of managing and deploying IT infrastructure and applications primarily through graphical user interfaces (GUIs) or point-and-click tools, rather than through code or command-line interfaces (CLIs).

What is an Illumio Core?

Illumio Core is a security platform designed to provide comprehensive visibility and segmentation for enterprise networks. It enables organizations to create micro-segmentation policies that control and monitor network traffic between workloads, regardless of where they are located, whether in data centers, public clouds, or hybrid environments. By implementing granular network segmentation and enforcing consistent security policies across the entire infrastructure, it strengthens an organization's security posture and reduces the risk of data breaches and cyber attacks. It is currently supported on CentOS, Red Hat, and Oracle Linux. For a good explanation, see Illumio Micro Segmentation.

What are the tools that are used?

The following tools will need to be installed: Packer, Terragrunt, and Ansible. I have tested on Ubuntu/amd64 and a Mac M2.

You will also need ansible-core and the following Ansible collections installed:

    pip3 install ansible-core
    ansible-galaxy collection install community.general
    ansible-galaxy collection install ansible.posix

Packer is used to build the base image templates with the STIG applied. Terragrunt orchestrates the installation of the 4 nodes using these templates, and Ansible installs the Illumio Core software.

The Nitty Gritty

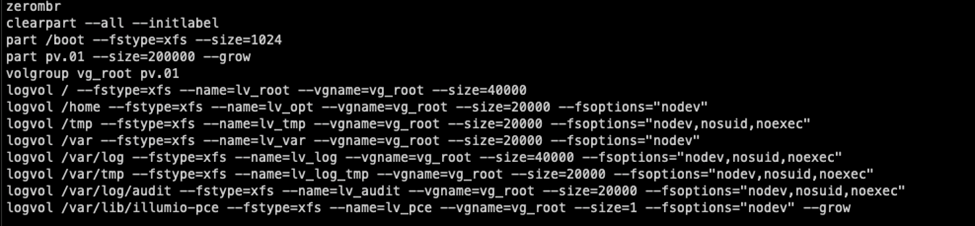

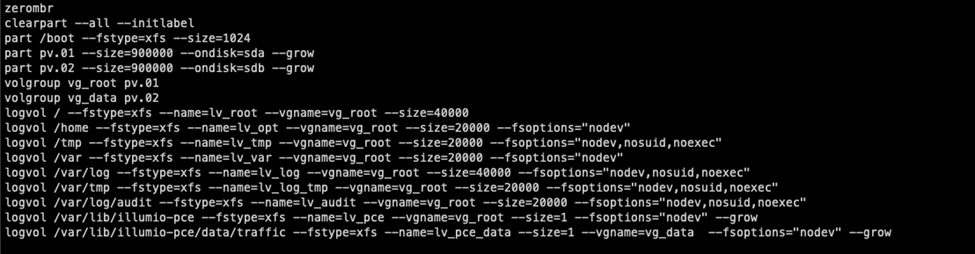

The Illumio Core software is called the Policy Compute Engine (PCE). Illumio uses the concept of a Single Node Cluster (SNC) or a Multi Node Cluster (MNC). They mean exactly what they say: one node or multiple nodes. We will be installing an MNC PCE, so we need to prepare the images accordingly. The MNC consists of Core and Data nodes, each with different storage and partition requirements. We need to account for these as well as the STIG partition requirements. See PCE Storage Device Partitions for the partition details. Below is a breakdown of the partitions we will use for both the Core and the Data nodes.

Core Nodes – (1) 200GB disk

| Partition | Disk Size |
| --- | --- |
| / | 40G |
| /home | 20G |
| /tmp | 20G |
| /var | 20G |
| /var/log | 40G |
| /var/tmp | 20G |
| /var/log/audit | 20G |
| /var/lib/illumio-pce | The remainder of the disk |
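As a quick sanity check on the Core node layout above, the space left for /var/lib/illumio-pce can be worked out by subtracting the fixed partitions from the 200GB disk. This is only a rough sketch: the `remaining_gb` helper is hypothetical, and it ignores /boot, swap, and filesystem overhead, none of which are listed in the table.

```shell
#!/usr/bin/env bash
# Hypothetical helper: subtract fixed partition sizes (in GB) from the disk size.
# Usage: remaining_gb <disk_size_gb> <partition_gb>...
remaining_gb() {
  local remaining=$1
  shift
  local size
  for size in "$@"; do
    remaining=$((remaining - size))
  done
  echo "$remaining"
}

# Core node: /, /home, /tmp, /var, /var/log, /var/tmp, /var/log/audit
remaining_gb 200 40 20 20 20 40 20 20   # prints 20
```

So roughly 20GB of the Core node disk is left for /var/lib/illumio-pce under these assumptions; in practice it will be somewhat less.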

Data Nodes – (2) 1TB disks

| Partition | Disk Size |
| --- | --- |
| / | 40G |
| /home | 20G |
| /tmp | 20G |
| /var | 20G |
| /var/log | 40G |
| /var/tmp | 20G |
| /var/log/audit | 20G |
| /var/lib/illumio-pce | The remainder of the first disk |
| /var/lib/illumio-pce/data/traffic | The entire 1TB second disk |

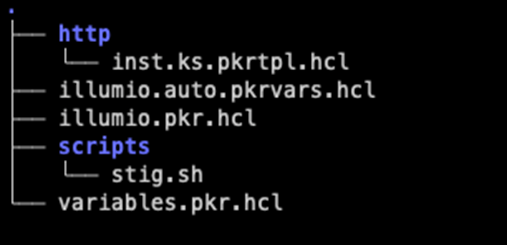

The details of the Packer setup are in the Packer Repo. The repo is a generic Packer setup for a STIG'd Red Hat image on VMware, with a partition scheme that incorporates the Illumio PCE requirements (see below).

There are plenty of blogs and websites with detailed information about Packer, e.g. SMS Blog, so I won’t go into too much detail about it. You will need to make your changes and then run it. I like to run it in debug mode so I can see what is going on in case things don’t go as planned.

Core Nodes – snippet from http/inst.ks.pkrtpl.hcl – Detailing the partitioning setup.

Data Nodes – snippet from http/inst.ks.pkrtpl.hcl – Detailing the partitioning setup.

Here is a breakdown of the Packer directory.

- http/inst.ks.pkrtpl.hcl = Red Hat Kickstart file – Kickstart Reference

- scripts/stig.sh = bash script with STIG remediations – RHEL 8 STIG Repo

- illumio.auto.pkrvars.hcl = values for the variables

- illumio.pkr.hcl = Main Packer definition file

- variables.pkr.hcl = Variable definition file

Here are the commands needed to make the magic happen.

    packer init .
    packer validate .
    PACKER_LOG=1 packer build --force .

This creates a STIG’d template within vSphere that you can now reference in Terragrunt.

Terragrunt

Now that we have our template established, we need to deploy the nodes on which the PCE software will be installed.

Clone the following repo: VMware vSphere Template. Below are the contents of “main.tf”.

    resource "vsphere_virtual_machine" "nodes" {
      for_each         = var.nodes
      name             = each.value.name
      resource_pool_id = data.vsphere_resource_pool.pool.id
      datastore_id     = data.vsphere_datastore.datastore.id
      folder           = var.vsphere_folder
      guest_id         = each.value.guest_id
      enable_disk_uuid = var.disk_uuid
      num_cpus         = each.value.cpu
      memory           = each.value.memory

      disk {
        label       = var.vm_linux_disk_name
        size        = each.value.disk_size_0
        unit_number = var.first_disk_unit_number
      }

      dynamic "disk" {
        for_each = can(each.value["disk_size_1"]) ? [1] : []
        content {
          label       = var.vm_second_linux_disk_name
          unit_number = var.second_disk_unit_number
          size        = each.value.disk_size_1
        }
      }

      network_interface {
        network_id = data.vsphere_network.network.id
      }

      clone {
        template_uuid = local.os_map[each.value.clone_template]
      }

      extra_config = {
        "guestinfo.metadata" = base64encode(templatefile("${path.module}/scripts/bootstrap_metadata.yaml", {
          ip_address = each.value.ip_address
          netmask    = each.value.netmask
          gateway    = each.value.gateway
          dns_server = each.value.dns_server
          hostname   = each.value.name
        }))
        "guestinfo.metadata.encoding" = var.encoding
        "guestinfo.userdata" = base64encode(templatefile("${path.module}/scripts/bootstrap_userdata.yaml", {
          script        = file("${path.module}/userdata.txt")
          root_passwd   = random_password.root_passwd.result
          hashed_passwd = htpasswd_password.user.sha512
          user          = var.user_name
          ssh_keys      = var.ssh_keys
        }))
        "guestinfo.userdata.encoding" = var.encoding
      }
    }

Let’s take a look at the code.

This configuration defines a vsphere_virtual_machine resource block that iterates over a collection of nodes, creating virtual machines with specified configurations such as name, CPU, memory, disk, network interface, cloning from a template, and additional guest configuration. This is a very basic configuration that gives you some flexibility using the “extra_config” section to do a deployment incorporating cloud-init.

Snippet from the Packer repo that makes this possible.

    cat <<EOF >>/etc/cloud/cloud.cfg
    ssh_pwauth: true
    EOF
    sudo ln -s /usr/local/bin/cloud-init /usr/bin/cloud-init
    for svc in cloud-init-local.service cloud-init.service cloud-config.service cloud-final.service; do
      sudo systemctl enable $svc
      sudo systemctl start $svc
    done
    cloud-init init --local
    cloud-init init
    cloud-init modules --mode=config
    cloud-init modules --mode=final
    cloud-init clean --logs --machine-id --reboot

The line that adds the user to /etc/sudoers without a password goes against the STIG, but it speeds up running the Ansible playbook and avoids putting passwords into the code. Remove that line once the full deployment is complete.

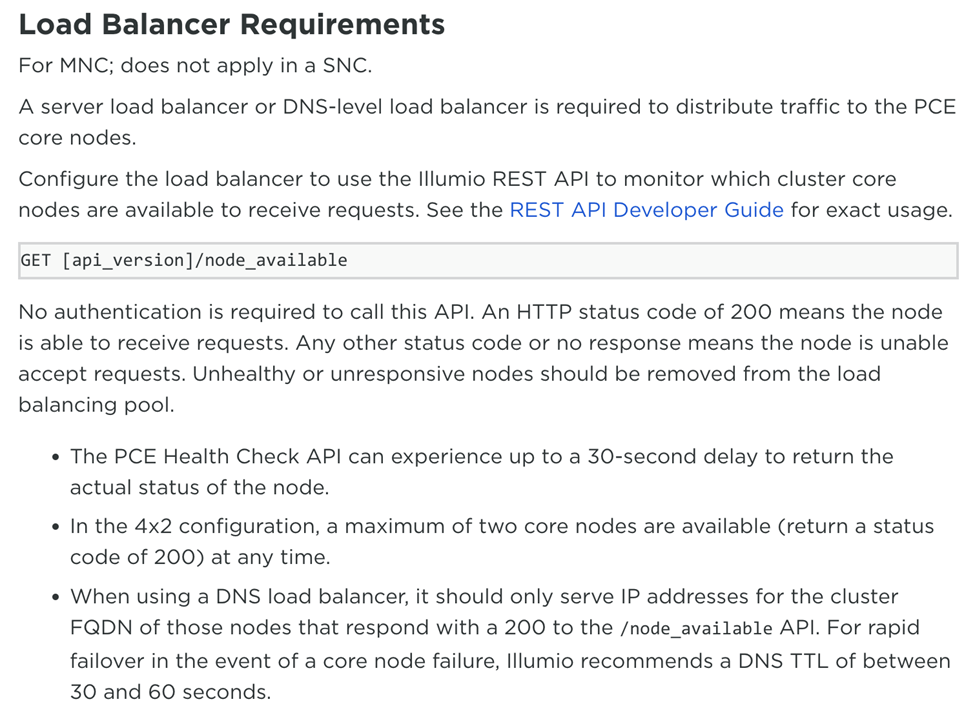

If you take a look in the examples/terragrunt folder, there is a ‘terragrunt.hcl’ that defines all the necessary values for the installation. It is an example, so you will need to modify it to fit your environment. In that example, there are 2 nodes labeled ‘lb1’ and ‘lb2’, which you will probably not need if you have a load balancer. If you don’t have a load balancer, you can create 2 Red Hat nodes and set the “create_lb” variable to true. This will install haproxy and keepalived on each node and turn them into an HA pair that will load balance the PCE traffic. Below is the excerpt from Illumio talking about the Load Balancer requirement; this is only for MNC.

The full list of requirements can be found here PCE Requirements. The biggest requirement is probably DNS, so make sure you fulfill that before you move on.
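Since DNS is the requirement most likely to trip you up, it can help to check name resolution before running anything else. The following is a minimal sketch, not part of either repo: `check_dns` is a hypothetical helper, the hostnames in the usage comment are the sample values from this post, and it assumes a Linux host where `getent` is available (it is not on macOS).

```shell
#!/usr/bin/env bash
# Pre-flight DNS check: report every hostname that does not resolve.
# check_dns is a hypothetical helper; getent ships with glibc on RHEL and Ubuntu.
check_dns() {
  local host failed=0
  for host in "$@"; do
    if getent hosts "$host" >/dev/null; then
      echo "OK      $host"
    else
      echo "MISSING $host"
      failed=1
    fi
  done
  return "$failed"
}

# Run the check only when hostnames are passed on the command line, e.g.
#   ./check_dns.sh pce.example.com pce-core0.examplenet.local.lan
if [ "$#" -gt 0 ]; then
  check_dns "$@"
fi
```

The function returns non-zero if any name fails to resolve, so it can gate a wrapper script before the Terragrunt apply.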

Example terragrunt.hcl for node deployment:

    terraform {
      source = "../..//"
    }

    generate "provider" {
      path      = "provider.tf"
      if_exists = "overwrite"
      contents  = <<EOF
    terraform {
      required_providers {
        vsphere = {
          source  = "hashicorp/vsphere"
          version = "~> 2.4"
        }
        random = {
          source  = "hashicorp/random"
          version = "~> 3.0"
        }
        htpasswd = {
          source = "loafoe/htpasswd"
        }
      }
    }
    EOF
    }

    inputs = {
      vsphere_datacenter = "dc1"
      vsphere_folder     = "Illumio"
      vsphere_network    = "v100"
      vsphere_cluster    = "Compute"
      vsphere_datastore  = "vsanDatastore"
      ssh_keys           = ["ssh-rsa AAAAB...."]
      user_name          = "pceadmin"

      nodes = {
        core0 = {
          name           = "pce-core0.examplenet.local.lan"
          ip_address     = "1.1.1.10"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 200
          cpu            = 8
          memory         = 32768
          clone_template = "pce_core"
          guest_id       = "rhel8_64Guest"
        },
        core1 = {
          name           = "pce-core1.examplenet.local.lan"
          ip_address     = "1.1.1.11"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 200
          cpu            = 8
          memory         = 32768
          clone_template = "pce_core"
          guest_id       = "rhel8_64Guest"
        },
        data0 = {
          name           = "pce-data0.examplenet.local.lan"
          ip_address     = "1.1.1.12"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 500
          cpu            = 8
          memory         = 32768
          disk_size_1    = 500
          clone_template = "pce_data"
          guest_id       = "rhel8_64Guest"
        },
        data1 = {
          name           = "pce-data1.examplenet.local.lan"
          ip_address     = "1.1.1.13"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 500
          cpu            = 8
          memory         = 32768
          disk_size_1    = 500
          clone_template = "pce_data"
          guest_id       = "rhel8_64Guest"
        },
        lb1 = {
          name           = "lb1.examplenet.local.lan"
          ip_address     = "1.1.1.14"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 200
          cpu            = 2
          memory         = 4096
          clone_template = "lb"
          guest_id       = "rhel8_64Guest"
        }
        lb2 = {
          name           = "lb2.examplenet.local.lan"
          ip_address     = "1.1.1.15"
          netmask        = "24"
          gateway        = "1.1.1.1"
          dns_server     = "1.1.1.1"
          disk_size_0    = 200
          cpu            = 2
          memory         = 4096
          clone_template = "lb"
          guest_id       = "rhel8_64Guest"
        }
      }
    }

Then apply Terragrunt.

    terragrunt init -upgrade
    terragrunt plan
    terragrunt apply

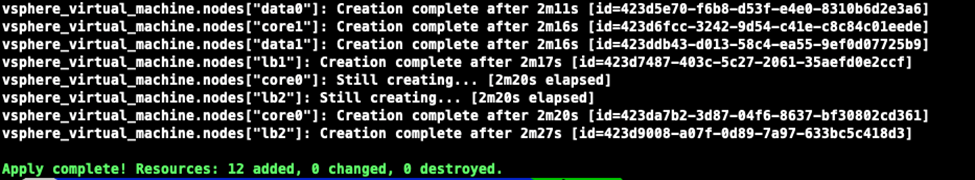

As you can see from above, the nodes should be created pretty quickly.

At this point the nodes should be ready, and you should be able to log in to each one with the username you defined in your terragrunt.hcl.

Now we are ready to do the Illumio PCE install.

Here is the link to the GitHub repo Illumio PCE Ansible.

A little background on this setup. Illumio has an example playbook found here: Illumio Ansible Playbook. This was a great start, but it was quite a few PCE versions behind and needed some TLC. It also needed some flexibility with certs, the load balancer, and custom configurations to accommodate a STIG’d operating system (see Appendix). I turned it into Terragrunt code so it can be deployed repeatably with a few variable changes.

Below is an example of the terragrunt.hcl for this repo.

    terraform {
      source = "../..//"
    }

    inputs = {
      ansible_ssh_user    = "exampleuser"
      create_certificates = false
      create_lb           = true
      pce_rpm_name        = "illumio-pce-23.2.20-161.el8.x86_64.rpm"
      pce_ui_rpm_name     = "illumio-pce-ui-23.2.20.UI1-192.x86_64.rpm"
      pce_org_name        = "Example LAB"
      pce_admin_user      = "example@gmail.com"
      pce_admin_fullname  = " example"

      lb_vip = {
        "ip_address" = "1.1.1.20"
        "host_name"  = "pce.example.com"
      }

      pce_lb_nodes = {
        "pce-lb1.examplenet.local.lan" = "1.1.1.14"
        "pce-lb2.examplenet.local.lan" = "1.1.1.15"
      }

      pce_core_nodes = {
        "pce-core0.examplenet.local.lan" = "1.1.1.10"
        "pce-core1.examplenet.local.lan" = "1.1.1.11"
      }

      pce_data_nodes = {
        "pce-data0.examplenet.local.lan" = "1.1.1.12"
        "pce-data1.examplenet.local.lan" = "1.1.1.13"
      }
    }

As you can see above there aren’t many values that you need to get this to work.

Let’s go through the explanation of the options – full list of options can be found in the README in the above repo.

- ansible_ssh_user = the user you defined when deploying the nodes

- create_certificates = there is a certificate requirement (see Certificate); if this is false, you will need to put your certs in the ‘certs’ folder

- create_lb = as stated above, set this to false if you already have a load balancer

- pce_rpm_name = you will have to provide your own packages from the support portal

- pce_ui_rpm_name = same as above; put the packages in the ‘packages’ folder

- pce_org_name = a generic name for the PCE organization

- pce_admin_user = the email address of the first user

- pce_admin_fullname = the full name of the first user

- lb_vip "ip_address" = the IP address of the LB VIP, whether you are creating a LB or not

- lb_vip "host_name" = the hostname of the LB VIP, whether you are creating a LB or not

- pce_lb_nodes = one "hostname = IP address" entry for each of the two LB nodes, used if "create_lb" is true

- pce_core_nodes = one "hostname = IP address" entry for each of the two core nodes

- pce_data_nodes = one "hostname = IP address" entry for each of the two data nodes

Just like the repo above, you just need to set your values accordingly and then run:

    terragrunt init
    terragrunt plan
    terragrunt apply

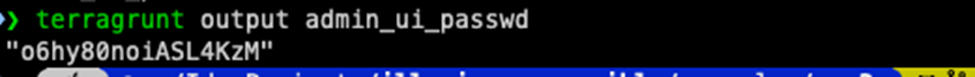

As you can see, the apply finished in just under 15 minutes. All you need to do now is run:

    terragrunt output admin_ui_password

That will output the password for the user configured in your terragrunt.hcl.

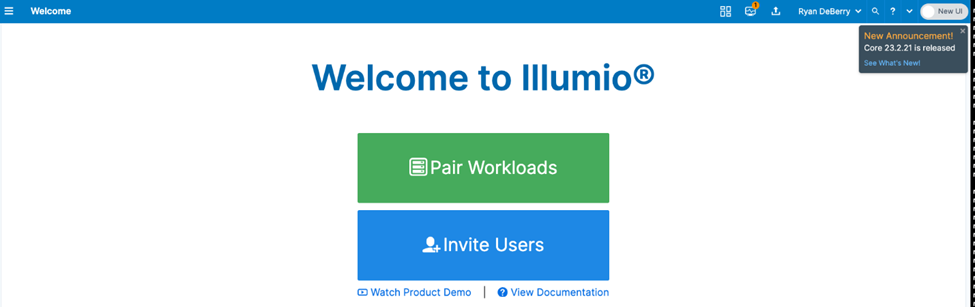

Browse to your PCE URL, e.g. ‘https://pce.example.com’.

Enter the user credentials and you are done.

In summary, by spending the time to set this up, we have been able to drastically reduce the amount of time it takes to deploy the solution in any customer’s VMware environment. In a matter of 20 minutes you have a fully installed Illumio PCE cluster.

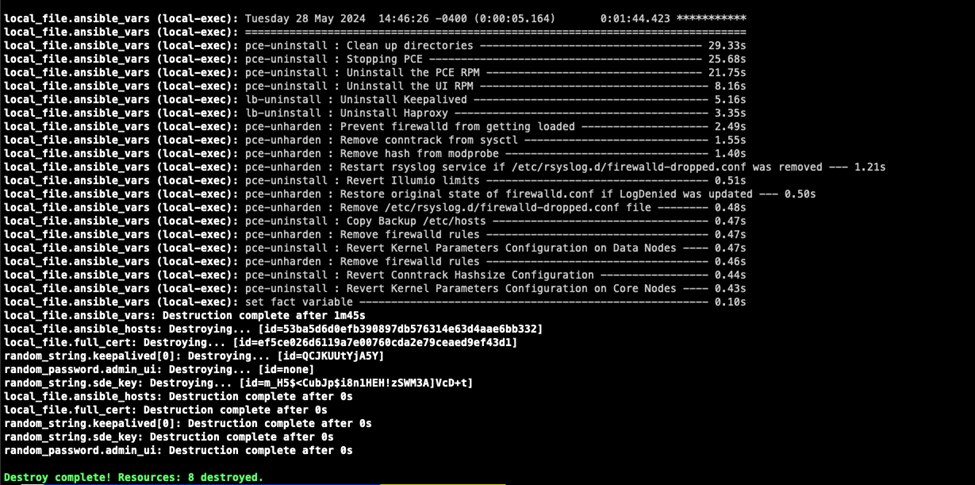

And when you’re done with your testing, you can just run a Terragrunt destroy, which removes all of the customizations and the Illumio PCE software. Then you are back to just a basic RHEL STIG image.

Appendix – STIG and Custom Configurations

Ilocron will fail to start because of fapolicyd, so a fix is implemented that adds the ilocron directory to a fapolicyd trust file.

    - name: Check status of the service
      systemd_service:
        name: fapolicyd
        state: started
      register: fapolicyd_status
      ignore_errors: true

    - name: Add ilocron dir to trusted fapolicy list
      ansible.builtin.command:
        cmd: fapolicyd-cli --file add /var/lib/illumio-pce/tmp/config/ilocron/ilocron/ --trust-file illumio
      when: "fapolicyd_status.state == 'started'"
      ignore_errors: true

    - name: Update the fapolicyd trust database
      ansible.builtin.command:
        cmd: fapolicyd-cli --update
      when: "fapolicyd_status.state == 'started'"
      ignore_errors: true

The PCE UI will throw an error because the ilo-pce user is unable to query NTP.

    - name: Append text to a file
      ansible.builtin.lineinfile:
        path: /etc/chrony.conf
        line: "user ilo-pce"
        insertafter: EOF

    - name: Change ownership of /run/chrony
      ansible.builtin.file:
        path: /run/chrony
        owner: ilo-pce
        group: ilo-pce
        recurse: yes

    - name: "Restart Chronyd"
      systemd_service:
        name: chronyd
        state: restarted
        enabled: yes
        masked: no

The STIG requires firewalld to be active and configured. This creates the necessary rules and sends all dropped packets to a separate log file.

    ---
    - name: Get connection information
      shell: nmcli --terse --fields NAME connection show
      register: connection_info
      ignore_errors: true
      changed_when: false

    - name: Extract connection names
      set_fact:
        connection_names: "{{ connection_info.stdout_lines | list }}"

    - name: "Startup Firewalld"
      systemd_service:
        name: firewalld
        state: started
        enabled: yes
        masked: no
      ignore_errors: true
      when: "prefix_name in group_names"

    - name: Check for Interfaces used
      set_fact:
        ansible_non_loopback_interfaces: "{{ ansible_interfaces | reject('search', '^lo$') | list }}"

    - name: Define First interface
      set_fact:
        first_non_loopback_interface: "{{ ansible_non_loopback_interfaces | default([]) | first }}"

    - name: Create new firewall zone
      firewalld:
        zone: illumio
        state: present
        permanent: true

    - name: Copy configuration file for new zone
      copy:
        src: /usr/lib/firewalld/zones/drop.xml
        dest: /etc/firewalld/zones/illumio.xml
        remote_src: yes

    - name: Always reload firewalld
      ansible.builtin.service:
        name: firewalld
        state: reloaded

    - name: Set default firewall zone
      firewalld:
        zone: illumio
        interface: "{{ first_non_loopback_interface }}"
        immediate: true
        permanent: true
        state: enabled

    - name: Reload firewall configuration
      firewalld:
        state: enabled

    - name: Bind interface to the illumio zone
      firewalld:
        zone: illumio
        state: enabled
        permanent: yes
        immediate: yes
        interface: "{{ first_non_loopback_interface }}"

    - name: Define Illumio ports
      set_fact:
        illumio_intra_ports:
          - "3100-3600"
          - "5100-6300"
          - "8000-8400"
          - "11200-11300"
          - "24200-25300"

    - name: Configure Illumio Intra TCP Rules
      firewalld:
        zone: illumio
        state: enabled
        permanent: true
        rich_rule: 'rule family="ipv4" priority="0" source address="{{ item.0 }}" port port="{{ item.1 }}" protocol="tcp" accept'
      with_nested:
        - "{{ ansible_play_batch }}"
        - "{{ illumio_intra_ports }}"

    - name: Configure Illumio Intra UDP Rules
      firewalld:
        zone: illumio
        state: enabled
        permanent: true
        rich_rule: 'rule family="ipv4" priority="0" source address="{{ item }}" port port="8000-8400" protocol="udp" accept'
      with_items: "{{ ansible_play_batch }}"

    - name: Block other traffic to Illumio ports
      firewalld:
        zone: illumio
        state: enabled
        permanent: true
        rich_rule: 'rule family="ipv4" priority="32000" source address="0.0.0.0/0" port port="{{ item.1 }}" protocol="tcp" drop'
      with_nested:
        - "{{ ansible_play_batch }}"
        - "{{ illumio_intra_ports }}"

    - name: Add port SSH to Firewalld
      firewalld:
        zone: illumio
        service: ssh
        permanent: yes
        state: enabled
      when: "prefix_name in group_names"

    - name: Add port {{ mgmt_port }} to Firewalld
      firewalld:
        zone: illumio
        port: "{{ mgmt_port }}/tcp"
        permanent: yes
        state: enabled
      when: "'corenodes' in group_names"

    - name: Add port {{ ven_lightning_port }} to Firewalld
      firewalld:
        zone: illumio
        port: "{{ ven_lightning_port }}/tcp"
        permanent: yes
        state: enabled
      when: "'corenodes' in group_names"

    - name: Reload firewalld
      command: firewall-cmd --reload
      ignore_errors: yes

    - name: "Reload Firewalld"
      systemd_service:
        name: firewalld
        state: reloaded
        enabled: yes
      ignore_errors: true
      when: "prefix_name in group_names"

    - name: Set the zone for the NetworkManager connection
      ansible.builtin.shell: "nmcli connection modify '{{ connection_names[0] }}' connection.zone illumio"

    - name: Check if LogDenied is set to off
      shell: grep -q -i '^LogDenied=off' /etc/firewalld/firewalld.conf
      register: logdenied_check
      ignore_errors: true

    - name: Update LogDenied setting if necessary
      ansible.builtin.shell: sed -i'Backup' 's/LogDenied=off/LogDenied=all/' /etc/firewalld/firewalld.conf
      when: logdenied_check.rc == 0

    - name: Output if LogDenied needed to be changed
      debug:
        msg: "LogDenied parameter has been updated."
      when: logdenied_check.rc == 0

    - name: Output if LogDenied was already correctly set
      debug:
        msg: "No changes required for LogDenied parameter."
      when: logdenied_check.rc != 0

    - name: Create /etc/rsyslog.d/firewalld-dropped.conf file
      ansible.builtin.copy:
        content: |
          :msg,contains,"_DROP" /var/log/firewalld-dropped.log
          :msg,contains,"_REJECT" /var/log/firewalld-dropped.log
          & stop
        dest: /etc/rsyslog.d/firewalld-dropped.conf

    - name: Restart rsyslog service
      ansible.builtin.service:
        name: rsyslog
        state: restarted
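To make the with_nested loop in the intra-node TCP task above more concrete: it produces one rich rule for every combination of node and port range. The sketch below prints the equivalent firewall-cmd commands rather than running them. The `print_intra_tcp_rules` helper and the node IP addresses are illustrative assumptions; the port ranges and the rule text are copied from the tasks above.

```shell
#!/usr/bin/env bash
# Port ranges copied from the illumio_intra_ports fact in the playbook.
ports=("3100-3600" "5100-6300" "8000-8400" "11200-11300" "24200-25300")

# Hypothetical helper: print one firewall-cmd call per (node, port range) pair.
print_intra_tcp_rules() {
  local node port
  for node in "$@"; do
    for port in "${ports[@]}"; do
      printf '%s\n' "firewall-cmd --permanent --zone=illumio --add-rich-rule='rule family=\"ipv4\" priority=\"0\" source address=\"${node}\" port port=\"${port}\" protocol=\"tcp\" accept'"
    done
  done
}

# Example node addresses (assumed, matching the sample terragrunt.hcl).
print_intra_tcp_rules 1.1.1.10 1.1.1.11 1.1.1.12 1.1.1.13
```

With four nodes and five port ranges this prints 20 accept rules, which is handy for comparing against the output of `firewall-cmd --zone=illumio --list-rich-rules` on a deployed node.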

It’s good practice to have a database backup available to restore in case of a catastrophe. This backs up the traffic and policy databases every night. It also backs up the certificates and runtime_env.yml, which is critical to PCE operation.

    - name: "Copy PCE backup script"
      template:
        src: pcebackup
        dest: /opt/illumio-pce/illumio/scripts/pcebackup
        owner: ilo-pce
        group: ilo-pce
        # The cron job below runs the script directly, so it needs the execute bit
        mode: "0700"
      when: "prefix_name in group_names"

    - name: Create the directory if it doesn't exist
      file:
        path: "/var/lib/illumio-pce/backup"
        state: directory
        group: ilo-pce
        owner: ilo-pce

    - name: Add daily policy database backup cron job to a specific user
      cron:
        name: "Daily policy PCE backup"
        minute: "30"
        hour: "1"
        job: "/opt/illumio-pce/illumio/scripts/pcebackup -d /var/lib/illumio-pce/backup"
        user: "root"
      when: "prefix_name in group_names"

Here is the backup script.

    #!/bin/bash

    BASEDIR=$(dirname "$0")
    PROG=$(basename "$0")
    DTE=$(date '+%Y%m%d.%H%M%S')
    HOSTNAME=$(hostname)
    LOGFILE=""
    VERSION_BUILD=""
    REDIS_IP=""
    datetime=$(date +"%Y-%m-%d_%H-%M-%S")

    # Certificates and runtime configuration to archive on every node
    files=(
      "/var/lib/illumio-pce/cert/server.crt"
      "/var/lib/illumio-pce/cert/server.key"
      "/etc/illumio-pce/runtime_env.yml"
    )

    # Print a timestamped message to stdout and append it to the log file
    log_print() {
      LOG_DTE=$(date '+%Y-%m-%d %H:%M:%S')
      echo "$LOG_DTE $1 $2"
      echo "$LOG_DTE $1 $2" >> "$LOGFILE"
    }

    # Build "<version>-<build>" from the PCE product version file
    get_version_build() {
      PVFILE="sudo -u ilo-pce cat /opt/illumio-pce/illumio/product_version.yml"
      VERSION=$($PVFILE | grep version: | awk '{print $2}')
      BUILD=$($PVFILE | grep build: | awk '{print $2}')
      VERSION_BUILD="$VERSION-$BUILD"
    }

    # Succeeds if one of this node's addresses matches the agent_traffic_redis_server IP
    is_redis_server() {
      REDIS_IP=$(sudo -u ilo-pce illumio-pce-ctl cluster-status | grep agent_traffic_redis_server | awk '{ print $2}')
      [ -n "$REDIS_IP" ] && ip addr | grep -qF -- "$REDIS_IP"
    }

    # Succeeds when show-primary prints no "Database Primary Node IP address" line;
    # the main program treats that case as the node to take the database dumps on
    is_db_master() {
      REDIS=$(sudo -u ilo-pce illumio-pce-ctl cluster-status | grep agent_traffic_redis_server | awk '{ print $2}')
      DBMASTER=$(sudo -u ilo-pce illumio-pce-db-management show-primary | grep -c 'Database Primary Node IP address[^0-9]')
      [ "$DBMASTER" -gt 0 ] && return 1 || return 0
    }

    usage() {
      echo
      echo "Usage: $0 -d <directory location> [-r retention_period] [-i SSH key file] [-u remote_user] [-h remote_host] [-p remote_path]"
      echo "  -d  PCE backup directory location"
      echo "  -r  Database backup retention period"
      echo "      Default is ${RETENTION:-7} days"
      echo "  -i  SSH key file."
      echo "      Default is ~/.ssh/id_rsa"
      echo "  -u  SCP remote user"
      echo "  -h  SCP remote host"
      echo "  -p  SCP remote destination path"
      echo
      exit 1
    }

    # Dump the policy or traffic database to the given file
    pce_dbdump() {
      local backup_file=$1
      local backup_type=$2
      log_print "INFO" "Dumpfile: $backup_file"
      if [ "$backup_type" = "policydb" ]; then
        log_print "INFO" "Backing up the policy database"
        sudo -u ilo-pce illumio-pce-db-management dump --file "$backup_file" >> "$LOGFILE"
      elif [ "$backup_type" = "trafficdb" ]; then
        log_print "INFO" "Backing up the traffic database"
        sudo -u ilo-pce illumio-pce-db-management traffic dump --file "$backup_file" >> "$LOGFILE"
      fi
      [ $? -gt 0 ] && log_print "ERROR" "Database backup failed!"
    }

    # Copy a file to the remote host over SCP
    scp_remote_host() {
      local dump_file="$1"
      local ssh_key="$2"
      local remote_user="$3"
      local remote_host="$4"
      local remote_path="$5"
      log_print "INFO" "SCPing to remote host $remote_host "
      log_print "INFO" "scp -i $ssh_key $dump_file $remote_user@$remote_host:$remote_path/."
      scp -i "$ssh_key" "$dump_file" "$remote_user@$remote_host:$remote_path/."
    }

    # Archive the given files (certificates and runtime_env.yml) into $DUMPDIR
    backup_files() {
      mkdir -p "$DUMPDIR" || { log_print "ERROR" "Failed to create directory $DUMPDIR"; exit 1; }
      datetime=$(date +"%Y-%m-%d_%H-%M-%S")
      backup_file="$DUMPDIR/pce_backup_$datetime.tar.gz"
      tar -czf "$backup_file" -P "${@}" || { log_print "ERROR" "Failed to create backup archive"; exit 1; }
      log_print "INFO" "Backup created successfully: $backup_file"
    }

    # Main Program
    while getopts ":d:r:i:u:h:p:" opt; do
      case $opt in
        d) DUMPDIR="$OPTARG";;
        r) RETENTION="$OPTARG";;
        i) SSHKEY="$OPTARG";;
        u) RMTUSER="$OPTARG";;
        h) RMTHOST="$OPTARG";;
        p) RMTPATH="$OPTARG";;
        :) echo "Option -$OPTARG requires an argument." >&2; exit 1;;
        *) usage;;
      esac
    done
    shift $((OPTIND -1))

    if [ -z "$DUMPDIR" ]; then
      usage
      exit 1
    fi

    LOGFILE="$DUMPDIR/$PROG.$DTE.log"
    get_version_build
    [ "$VERSION_BUILD" = "" ] && DMP_PREFIX="pcebackup" || DMP_PREFIX="pcebackup.$VERSION_BUILD"
    [ -z "$SSHKEY" ] && SSHKEY="$HOME/.ssh/id_rsa"
    [ -z "$RETENTION" ] && RETENTION=7

    if [ ! -d "$DUMPDIR" ]; then
      log_print "ERROR" "Directory $DUMPDIR does not exist."
      exit 1
    fi

    log_print "INFO" "Starting $PROG Database Backup"
    log_print "INFO" "PCE Version : $VERSION_BUILD"
    is_redis_server
    log_print "INFO" "Redis Server: $REDIS_IP"

    if is_db_master; then
      pce_dbdump "$DUMPDIR/$DMP_PREFIX.policydb.$HOSTNAME.dbdump.$DTE" "policydb"
      pce_dbdump "$DUMPDIR/$DMP_PREFIX.trafficdb.$HOSTNAME.dbdump.$DTE" "trafficdb"
      if [ -n "$SSHKEY" ] && [ -n "$RMTUSER" ] && [ -n "$RMTHOST" ] && [ -n "$RMTPATH" ]; then
        scp_remote_host "$DUMPDIR/$DMP_PREFIX.policydb.$HOSTNAME.dbdump.$DTE" "$SSHKEY" "$RMTUSER" "$RMTHOST" "$RMTPATH"
        scp_remote_host "$DUMPDIR/$DMP_PREFIX.trafficdb.$HOSTNAME.dbdump.$DTE" "$SSHKEY" "$RMTUSER" "$RMTHOST" "$RMTPATH"
      fi
      # Bundle both dumps with the certificates and runtime_env.yml, then drop the raw dumps
      tar -czf "$DUMPDIR/pce_backup_$datetime.tar.gz" "$DUMPDIR/$DMP_PREFIX.policydb.$HOSTNAME.dbdump.$DTE" "$DUMPDIR/$DMP_PREFIX.trafficdb.$HOSTNAME.dbdump.$DTE" -P "${files[@]}"
      rm -f "$DUMPDIR/$DMP_PREFIX.policydb.$HOSTNAME.dbdump.$DTE" "$DUMPDIR/$DMP_PREFIX.trafficdb.$HOSTNAME.dbdump.$DTE"
    else
      backup_files "${files[@]}"
      if [ -n "$SSHKEY" ] && [ -n "$RMTUSER" ] && [ -n "$RMTHOST" ] && [ -n "$RMTPATH" ]; then
        scp_remote_host "$DUMPDIR/pce_backup_$datetime.tar.gz" "$SSHKEY" "$RMTUSER" "$RMTHOST" "$RMTPATH"
      fi
    fi

    # Remove archives and logs older than the retention period
    log_print "INFO" "Cleaning up old pcebackup files"
    find "$DUMPDIR" \( -name "pce_backup_*" -o -name "pcebackup.*.log" \) -not -newermt "$(date -d "$RETENTION days ago" +%Y-%m-%d)" -exec rm {} +
    log_print "INFO" "Completed $PROG Backup"
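The retention cleanup at the end of the script relies on GNU find's -newermt test. The sketch below exercises the same expression against a scratch directory, so you can see which files would be removed before trusting it with real backups. The `prune_backups` function name and the sample file names are assumptions for illustration, and GNU find, date, and touch are required.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete backup archives and logs older than N days.
# Usage: prune_backups <directory> <retention_days>
prune_backups() {
  local dir=$1 days=$2
  local cutoff
  cutoff=$(date -d "$days days ago" +%Y-%m-%d)
  find "$dir" \( -name "pce_backup_*" -o -name "pcebackup.*.log" \) \
    -not -newermt "$cutoff" -exec rm -f {} +
}

# Demonstration in a throwaway directory.
demo_dir=$(mktemp -d)
touch -d "30 days ago" "$demo_dir/pce_backup_old.tar.gz"
touch "$demo_dir/pce_backup_new.tar.gz"
prune_backups "$demo_dir" 7
ls "$demo_dir"   # only pce_backup_new.tar.gz remains
```

Because the cutoff is a date without a time, files are compared against midnight of that day, so the effective retention can be up to a day longer than the number passed in.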